Architectural visualization by game design

Augmented Reality

Find your Augmented mascot! 2021, United Kingdom, London

A participatory urban AR game supported by spatial and haptic cognition.

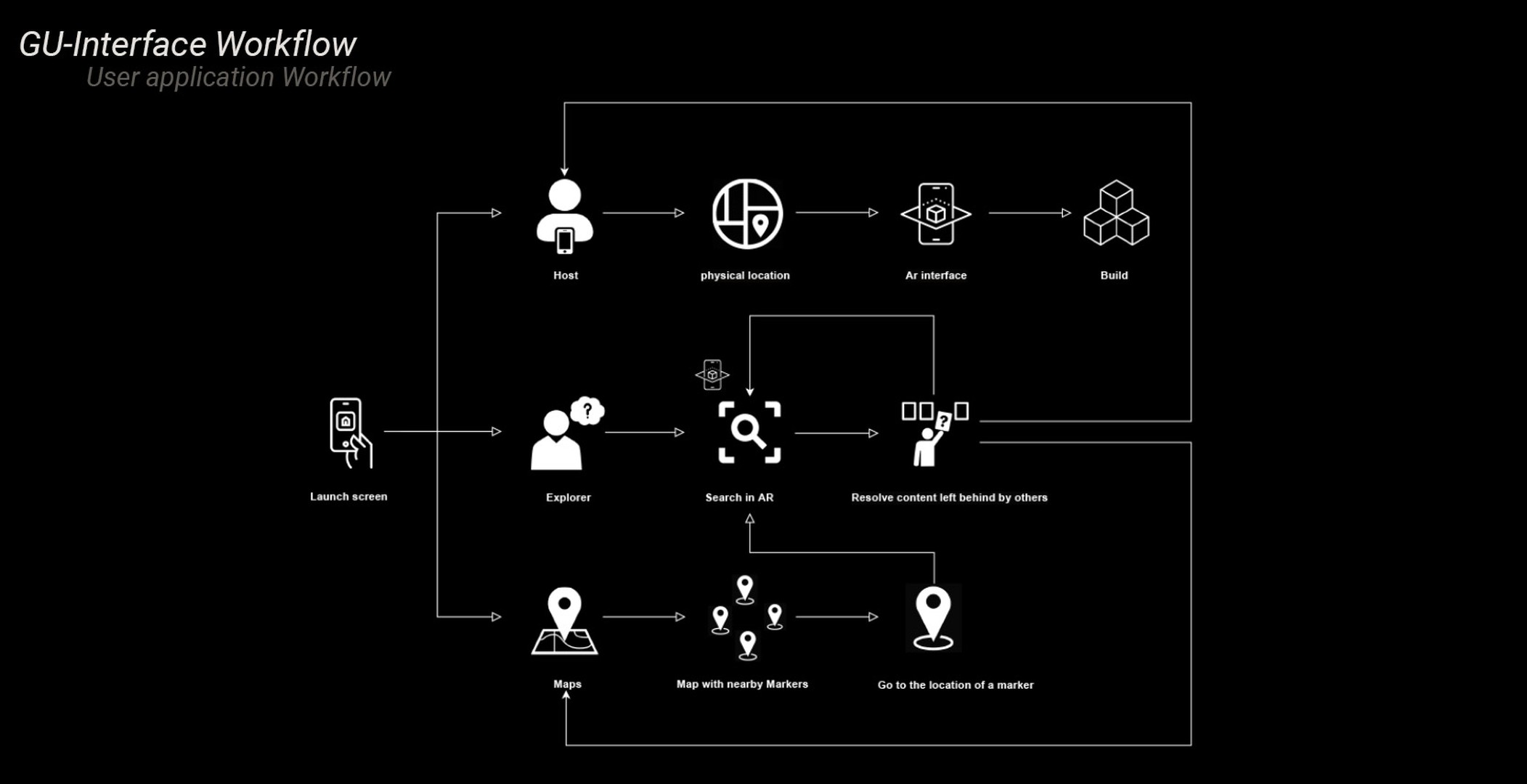

The game involves a host, who builds augmented blocks at preferred locations. The players, who resolve these blocks, then spread out in search of them. Maps with markers and guide images are provided to ease navigation. The three key interfaces that make up the game are:

• Build & Host interface: where the host builds Augmented objects in the space of their interest.
• Map interface: where the player gets hints and help to navigate to the Augmented object.
• Explore interface: where the player can explore various places of possible interventions.

Overall, tweaking the game to log specific information in the backend reveals data about many conscious and unconscious decisions and behaviours exhibited by participants during gameplay, which can be interpreted to find meaningful information about human cognition of spatiality and haptics.

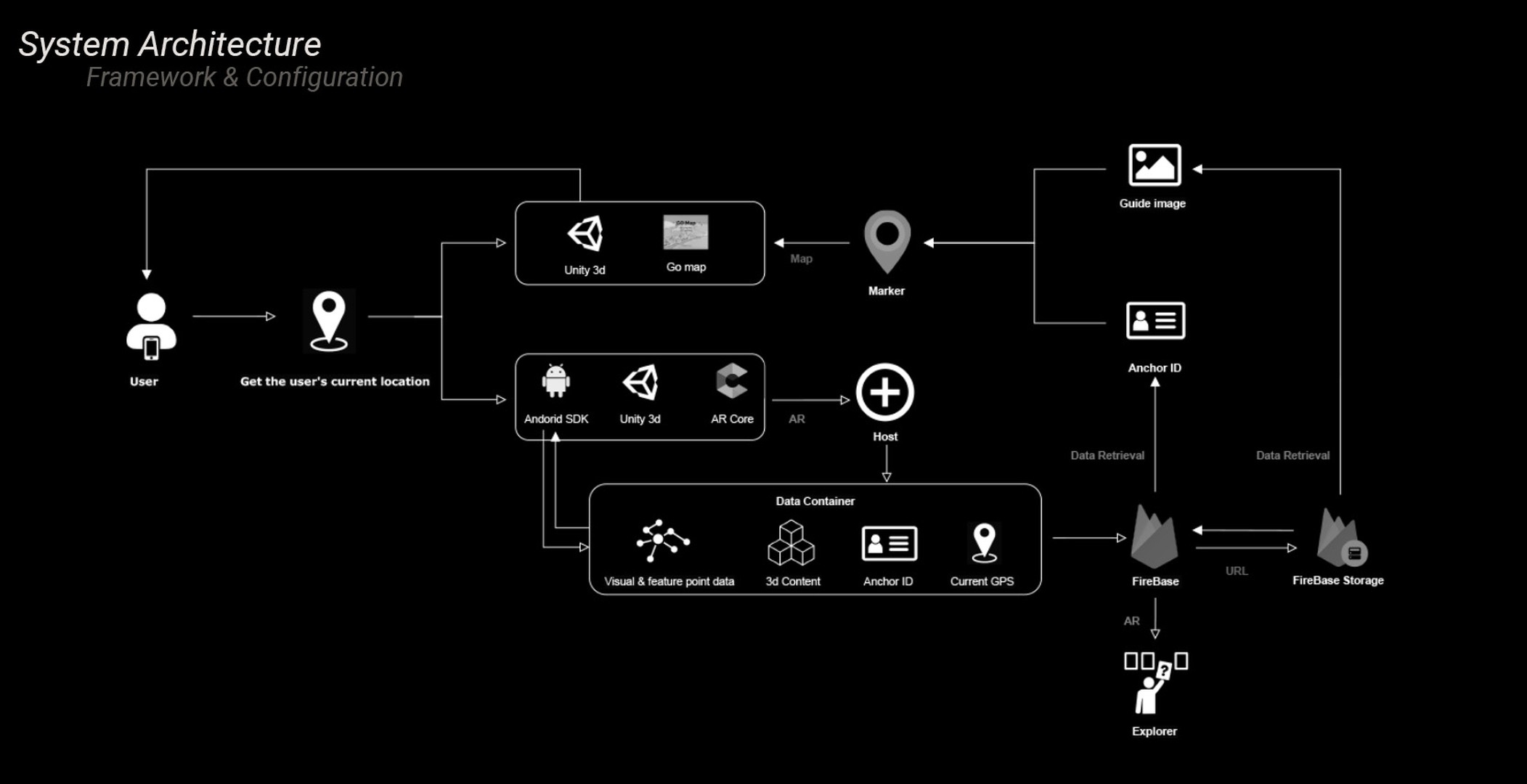

This project explores playful ways to support participatory urbanism through an AR digital platform. The aim is to strengthen citizens' digital rights and to support crowd intelligence and co-creation through spatial and haptic cognition. A study was carried out to explore how participants perceive, accept, host, and resolve Augmented objects, and how they understand those objects in relation to the location-based system.

The human mind is too complex for the nature of its cognition to be understood completely. Here, the user's spatial cognition is influenced by several factors, chiefly spatial memory, spatial comprehension, and visual fixation. The other focus is haptic cognition, which refers to the feel and sense of touch, vibration, movement, and so on. Can the user's cognition of space and haptics give us a clearer picture of their behaviour and answer the hypotheses that revolve around human cognition and unconscious behavioural responses?

To understand the effect of cognition on spatial and haptic interfaces, we created a game in which the effects of different cognitive factors could be studied and observed by tweaking the game and its interface. The game was designed to intrigue the user into finding an Augmented object, with supporting maps and guides to help locate it. We studied the users' immediate response, their cognition of the space and the interface, their way of approach, the relative importance they gave to the physical and the virtual, and their excitement at finding the Augmented Reality object. These findings helped us improve the different interfaces of the game and their features, and deepened our understanding of the behaviour and cognition behind them. The study focuses on:

• Spatial memory: The ability to store information about a space.
• Spatial comprehension: The ability to understand a space.
• Visual fixation: The visual level of focus a person has on a project.

Hosting an object involves capturing visual data and feature points through the device camera, together with the current GPS position. Once the host has constructed the desired 3D content, the model is packaged with a unique anchor ID, and the resulting dataset is stored in Firebase Cloud Storage. This completes the hosting side. The game continuously monitors the device location and renders markers for objects in the neighbourhood, with the cloud servers filtering the stored dataset for nearby content. A guide image representing the chosen marker is displayed on request. On the resolving side, an object is rendered when the user reaches the physical space and the matching visual data appears before the AR camera in the Explore interface.
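The nearby-content filtering described above can be illustrated with a minimal sketch. It assumes each hosted record carries a latitude and longitude next to its anchor ID, and it uses a plain haversine distance check. The `HostedAnchor` schema, the `nearby_anchors` function, the 200 m radius, and the sample coordinates are all hypothetical and are not taken from the project's backend; the sketch makes no Firebase calls.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class HostedAnchor:
    """One hosted object record (hypothetical schema, not the project's)."""
    anchor_id: str    # unique anchor ID assigned when the object was hosted
    lat: float        # latitude of the hosting position, in degrees
    lon: float        # longitude of the hosting position, in degrees
    guide_image: str  # file name of the guide image shown on request


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two GPS positions."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_anchors(anchors, lat, lon, radius_m=200.0):
    """Return (distance_m, anchor) pairs within radius_m, nearest first."""
    hits = [(haversine_m(lat, lon, a.lat, a.lon), a) for a in anchors]
    return sorted((h for h in hits if h[0] <= radius_m), key=lambda h: h[0])


# Made-up sample data: one anchor close to the player, one far away.
hosted = [
    HostedAnchor("anchor-near", 51.5246, -0.1340, "guide_near.png"),
    HostedAnchor("anchor-far", 51.5074, -0.1278, "guide_far.png"),
]
markers = nearby_anchors(hosted, lat=51.5250, lon=-0.1340)
for distance, anchor in markers:
    print(f"{anchor.anchor_id}: {distance:.0f} m away, guide {anchor.guide_image}")
```

In a setup like this the filter would be re-run whenever the device location changes, so the Map interface only shows markers the player can realistically walk to.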

Here but when: The archive across space and time 2020, United Kingdom, London

An interactive network of spatial information augmenting the present configuration with ones from the past through various levels of accessibility.

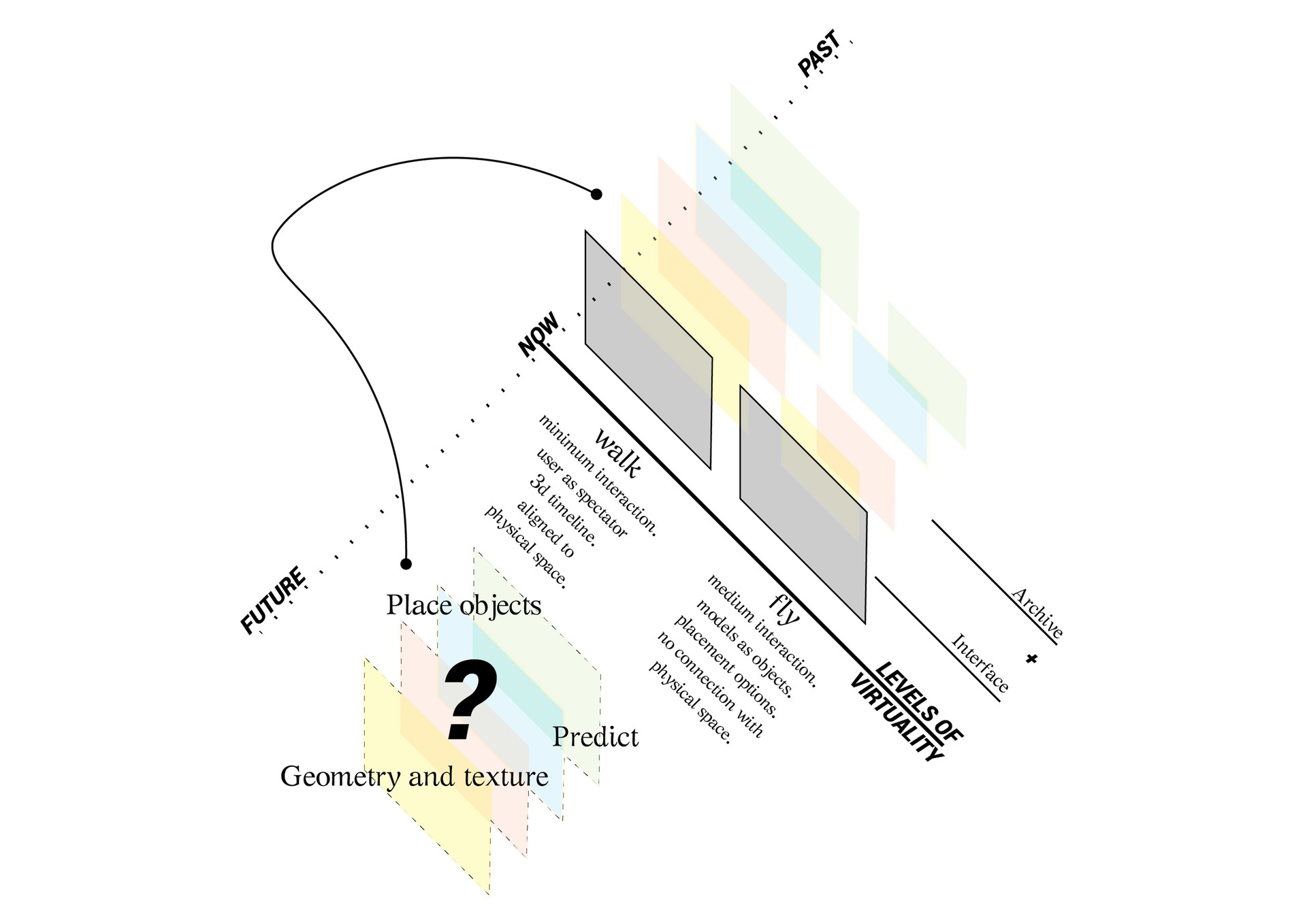

The immersive environment is developed using the game engine ‘Unity’ and the Vuforia SDK. The 3D scans are rendered as meshes and visualised in a sequence based on time periods. The interface is an augmented reality mobile application which creates a gradual transition of virtuality between the time periods. Its key feature is the Time Machine, a two-dimensional graphic element which allows the user to navigate through older 3D scans of the space at a specific location. Additionally, the archive is accessible through “spots of information”. The discrete objects that make up the 3D scans each have their own local sub-archive: organised and catalogued information about their past states. This local information is only visible when the user is near the objects.
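The two behaviours described above, stepping through scans by time and revealing local information only near an object, can be sketched as follows. This is an illustration in Python rather than the project's Unity code, and it rests on assumptions: each scan has a capture timestamp, each spot of information has a position in local room coordinates, and visibility is a simple distance threshold. The names `Scan`, `InfoSpot`, `scan_for_time`, and `visible_spots`, the 1.5 m radius, and the sample dates and file names are all hypothetical.

```python
import math
from bisect import bisect_right
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Scan:
    captured_at: datetime  # when the 3D scan was recorded
    mesh_file: str         # mesh asset rendered for this time period


@dataclass
class InfoSpot:
    name: str
    position: tuple        # (x, y, z) in metres, local room coordinates


def scan_for_time(scans, when):
    """Latest scan captured at or before `when`, or None if none exists."""
    ordered = sorted(scans, key=lambda s: s.captured_at)
    times = [s.captured_at for s in ordered]
    i = bisect_right(times, when)  # number of scans captured up to `when`
    return ordered[i - 1] if i else None


def visible_spots(spots, user_pos, radius_m=1.5):
    """Spots whose sub-archive is shown because the user is close enough."""
    return [s for s in spots if math.dist(s.position, user_pos) <= radius_m]


# Made-up sample archive.
archive = [
    Scan(datetime(2020, 6, 20), "room_june.obj"),
    Scan(datetime(2020, 1, 10), "room_january.obj"),
    Scan(datetime(2020, 3, 5), "room_march.obj"),
]
spots = [InfoSpot("desk", (0.0, 0.0, 0.0)), InfoSpot("shelf", (5.0, 0.0, 0.0))]

selected = scan_for_time(archive, datetime(2020, 4, 1))
print(selected.mesh_file)
print([s.name for s in visible_spots(spots, (1.0, 0.0, 0.0))])
```

Choosing the latest scan at or before the requested time means a Time Machine slider can move continuously while the rendered mesh changes only at capture dates.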

Visual Concept: Comprehension of the connection between space and time is a potential challenge to overcome with the existing devices used to record and represent spatial information. A sequential representation of the space in relation to time, retrieved from an organized archive, can help us better comprehend this link. This can also unfold the possibility of rendering the memory in different perspectives to different users depending on their location inside the space and the time they recall from the archive.

Can we use real-time depiction as a dynamic tool for recording spatial memory through media traditionally used to represent space? Cultures have always been connected to technical life, as humans have been extending their capabilities through technology. However, in the information society, interfaces which enmesh our work in so-called real time are dominating our everyday lives, while at the same time our online activity is constantly being stored in a dynamic archive. This research project explores the connection of the archive to time and spatial memory. It focuses on contemporary technical apparatuses of recording, taking 3D scanning as an example of a spatial digital replication tool.

The project investigates the above question through the development of an archive of 3D scanning models which can be dynamically updated with data from users. An interactive network of spatial information is created, highlighting the importance of tool-based fusion, level of accessibility and experience. It outlines the qualities of organising and visualising data in order to build, to a degree, collective memory.

The 3D scans used in the interface consist of two basic types of information: position and colour, which after some basic operations are expressed as computational geometry and texture respectively. These features are directly linked to the time parameter, as they are affected by lighting conditions, reflections and all changes which affect spatial visuality in general. As Derrida explains, “ideal Objectivity is not fully constituted”; in other words, for information to be sustained through time, it must be able to be incarnated in a transmissible form and organised in a way that is readable and graspable. The compilation of a certain amount of information in one platform forms an archive or, in our case, collective knowledge based on an information distribution infrastructure.

This interactive archive enhances human experience by augmenting the present spatial configuration with those of the past. The 3D scans are overlaid on the physical environment and can be viewed in a sequence based on the date and time they were captured. The system is flexible in scale and general functionality. Apart from the room scale, other potential applications could be a museum, for navigating through past exhibitions, or a construction site, for exploring the progress of the work.

Virtual Reality

To create this virtual reality experience, I brought a Revit template project into Stingray via 3ds Max and explored the use of Stingray to package the game design, along with its different levels, into a portable executable (".exe") file. Animations were created in 3ds Max and hooked up in Stingray with triggers that perform specific actions. Please download the file from the link below and try it with a virtual reality headset.